What's happening in round 5 of PlonK?

posted June 2023

Someone was asking the following question on PlonK:

If you also looked at PlonK and were wondering the same thing, here's a short answer. If you do not care about PlonK, feel free to ignore this post. If you care about PlonK but are starting from the very beginning, then just check my series of videos on PlonK.

First, there are different things going on in this picture. The best way to understand what's going on is to understand them individually.

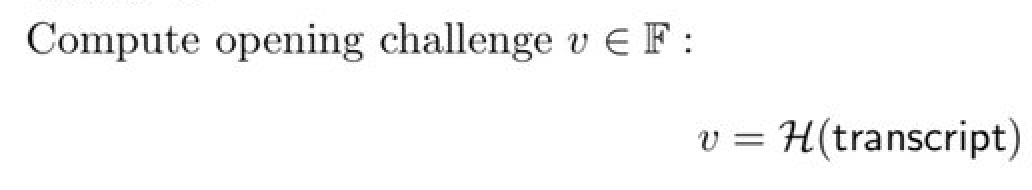

The first step is a random challenge from the verifier in the interactive version of the protocol. Since we're in the non-interactive version, we've replaced the messages of the verifier by calls to a random oracle. This technique for converting an interactive protocol into a non-interactive one is very famous and used all over the place. It's called Fiat-Shamir.

Because we rely on a random oracle, proofs have to state that they are in the random oracle model, and some people don't like that too much because in the real world you end up instantiating these random oracles with hash functions (which are non-ideal constructions). So some people like protocols better when they don't rely on random oracles. In practice, if your hash function is thought to behave like a random oracle (e.g. SHA-3), then you're all good.

Fiat-Shamir'ing an interactive protocol only works if the protocol is a public-coin protocol, which PlonK is. Public-coin here means that the messages of the verifier are random values (coin tosses) that are public (outsiders can look at them and this won't affect the security of the protocol).

Another interesting point in that picture is that we're hashing the whole transcript, which is something you need to do in order to avoid a large class of ambiguity attacks. Protocols often forget to specify this correctly and a number of attacks have been found on PlonKish protocols due to that. See Weak Fiat-Shamir Attacks on Modern Proof Systems for more detail.
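To make the "hash the whole transcript" point concrete, here is a minimal sketch of deriving a challenge with Fiat-Shamir. Everything in it is an assumption for illustration: the `Transcript` class, the `derive_z` helper, the labels, and the toy modulus `FIELD_ORDER` are hypothetical, and a real implementation would follow the serialization rules of its own specification.

```python
import hashlib

# Toy prime standing in for the scalar field order (an assumption; real PlonK
# deployments use the scalar field of their pairing-friendly curve).
FIELD_ORDER = 2**61 - 1


class Transcript:
    """Hypothetical Fiat-Shamir transcript: absorb messages, squeeze challenges."""

    def __init__(self, protocol_label: bytes):
        self._hasher = hashlib.sha3_256()
        self.append(b"protocol", protocol_label)

    def append(self, name: bytes, data: bytes) -> None:
        # Length-prefix every field so two different message sequences can
        # never serialize to the same byte string.
        for part in (name, data):
            self._hasher.update(len(part).to_bytes(8, "big"))
            self._hasher.update(part)

    def challenge(self, name: bytes) -> int:
        self.append(b"challenge", name)
        digest = self._hasher.copy().digest()
        # Feed the digest back in so later challenges depend on earlier ones.
        self._hasher.update(digest)
        # Reducing 256 bits modulo a 61-bit prime leaves negligible bias here.
        return int.from_bytes(digest, "big") % FIELD_ORDER


def derive_z(public_input: bytes, commitments: list) -> int:
    """Derive an evaluation challenge from everything sent so far."""
    transcript = Transcript(b"toy-plonk")
    transcript.append(b"public_input", public_input)
    for i, commitment in enumerate(commitments):
        transcript.append(b"commitment_%d" % i, commitment)
    return transcript.challenge(b"z")
```

The design choice worth noticing is that the challenge depends on the public input and on every earlier message, not just on the latest one. Changing any of them changes the challenge, which is the property the ambiguity attacks exploit when it is missing.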

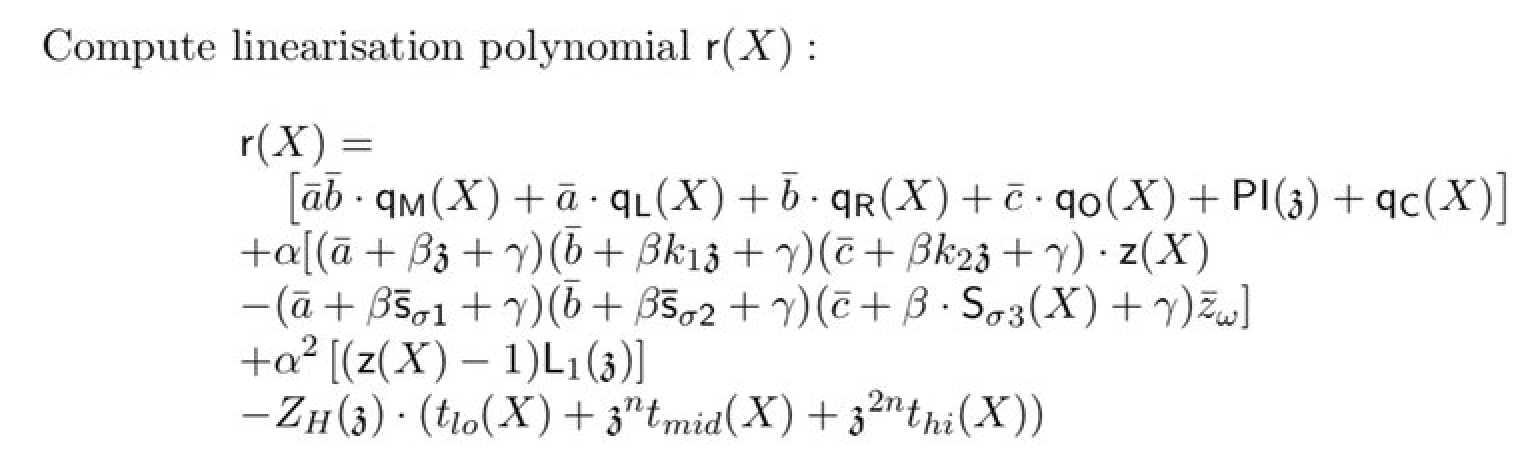

The second step is to compute the linearization of the composition polynomial. The composition polynomial is a term I stole from STARKs but I like it. It's THE polynomial, the one that combines all the checks. In PlonK this means the polynomial that combines the checks for all the gates of the circuit and the wiring (permutation).

I'm not going to explain too much about the linearization because I already have a post on it here. But to recap, linearizing is when you evaluate parts of your polynomial. So anything that's evaluated at $z$ is linearized. And anything that has a bar above it (e.g. $\bar{a}$) is linearized as well. Note that the prover could evaluate everything if they wanted to, which would let the verifier compute the entire check "in the clear". But doing that means that the proof is larger, and there are more evaluation proofs to aggregate. It's a tradeoff, that might pay off if you also want to implement recursive zero-knowledge proofs (which require a verifier implemented in a circuit).
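As a small worked example of the recap above, consider only the gate part of the composition polynomial. This is a sketch: the selector names $q_L, q_R, q_O, q_M, q_C$ are taken from the PlonK paper rather than from this post, and public inputs are ignored. Before linearization, the gate check is

$$a(X)\,b(X)\,q_M(X) + a(X)\,q_L(X) + b(X)\,q_R(X) + c(X)\,q_O(X) + q_C(X)$$

If the prover sends the evaluations $\bar{a} = a(z)$, $\bar{b} = b(z)$ and $\bar{c} = c(z)$, the linearized version is

$$\bar{a}\,\bar{b}\,q_M(X) + \bar{a}\,q_L(X) + \bar{b}\,q_R(X) + \bar{c}\,q_O(X) + q_C(X)$$

The second expression is a linear combination of the selector polynomials with known scalars, so a verifier holding commitments to the selectors can build a commitment to it on its own, while both expressions agree when evaluated at $z$.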

We're looking at the prover side in this picture. While the verifier does symmetrical things to the prover, the prover's job here is to form the composition polynomial and to prove that it evaluates to 0 on a number of points (or that it "vanishes on some domain"). So to do that, it has to prove that it is equal to the vanishing polynomial $Z_H$ times some quotient $t(X)$. If you don't understand that, you can read this other post I have on the subject.
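The "vanishes on the domain if and only if it is a multiple of $Z_H$" claim can be checked on a toy example. The sketch below works over the small prime field $\mathbb{F}_{17}$ with a domain of size 4; the field, the domain, the polynomials, and the helper names are all assumptions chosen for illustration, and coefficients are listed from the constant term upward.

```python
P = 17          # toy prime field (an assumption for illustration)
OMEGA = 4       # 4 has multiplicative order 4 modulo 17
H = [pow(OMEGA, i, P) for i in range(4)]   # the domain: [1, 4, 16, 13]
Z_H = [P - 1, 0, 0, 0, 1]                  # X^4 - 1, coefficients low-to-high


def evaluate(poly, x):
    """Evaluate a polynomial at x using Horner's rule."""
    acc = 0
    for coeff in reversed(poly):
        acc = (acc * x + coeff) % P
    return acc


def divmod_poly(num, den):
    """Long division over F_P; returns (quotient, remainder)."""
    num = [c % P for c in num]
    quot = [0] * max(len(num) - len(den) + 1, 1)
    inv_lead = pow(den[-1], -1, P)  # modular inverse, Python 3.8+
    for i in range(len(num) - len(den), -1, -1):
        q = num[i + len(den) - 1] * inv_lead % P
        quot[i] = q
        for j, d in enumerate(den):
            num[i + j] = (num[i + j] - q * d) % P
    return quot, num[:len(den) - 1]


# f(X) = (X^4 - 1)(X + 3) = X^5 + 3X^4 - X - 3 vanishes on all of H.
f = [-3, -1, 0, 0, 3, 1]
quotient, remainder = divmod_poly(f, Z_H)
```

Here `quotient` plays the role of $t(X)$: the remainder is all zeros exactly because `f` is zero on every element of `H`. Adding 1 to the constant term of `f` breaks the vanishing property and leaves a nonzero remainder.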

The final piece of the puzzle to understand that equation is that we can simplify the $f(X) = Z_H(X) \cdot t(X)$ check using Maller's optimization, which I talk about here. This is why we subtract $Z_H(X) \cdot t(X)$ from our composition polynomial, and this is also why we linearize the vanishing polynomial by evaluating it at $z$, which gives $Z_H(z)$.
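Written out, the simplification looks roughly like this. It is a sketch: $f_{\text{lin}}$ is a name assumed here for the linearized composition polynomial and $r$ for the result. Instead of checking

$$f(z) = Z_H(z) \cdot t(z)$$

from separately opened values, the prover forms a single polynomial and shows that it evaluates to zero:

$$r(X) = f_{\text{lin}}(X) - Z_H(z) \cdot t(X), \qquad r(z) = 0$$

Because $f_{\text{lin}}(z) = f(z)$, we have $r(z) = f(z) - Z_H(z) \cdot t(z)$, which is zero exactly when the original check holds at $z$. The scalar $Z_H(z)$ is something the verifier can compute on its own, so $r$ stays a linear combination of committed polynomials.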

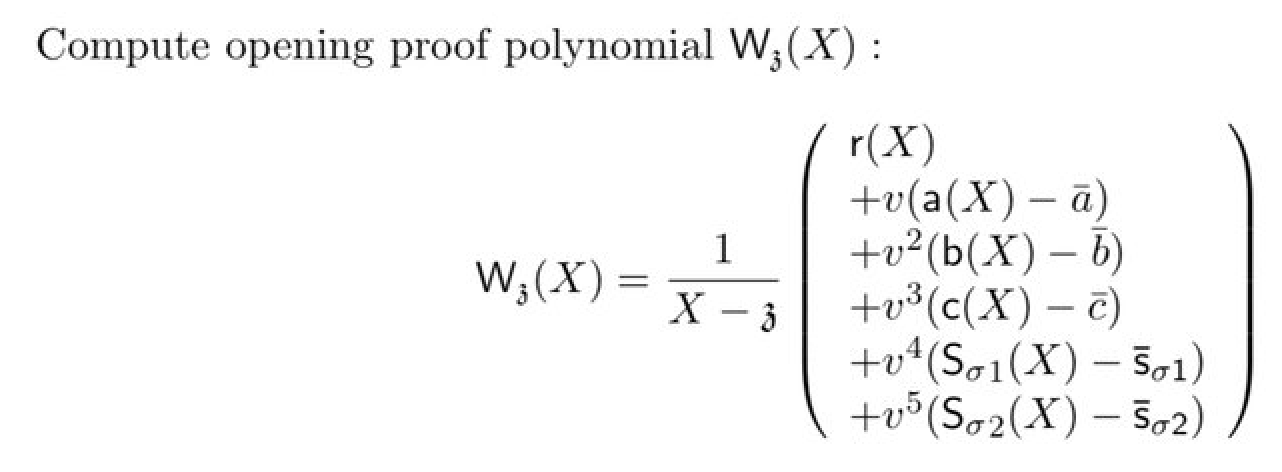

Once we have formed the polynomial which checks that the composition polynomial is equal to the vanishing polynomial times some quotient ($f = Z_H \cdot t$), we have to evaluate it at a random point. We already know the random point $z$, which we've already used to evaluate some parts of the polynomial (during the linearization). The equation you see in the picture is how you compute a KZG evaluation proof. If you don't know that, you can check my article on KZG.
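The polynomial part of a KZG evaluation proof is the quotient $(f(X) - f(z))/(X - z)$, which is only a polynomial because $f(X) - f(z)$ has a root at $z$. The sketch below computes that quotient with synthetic division over a toy prime field. The modulus, the example polynomial, and the function names are assumptions, and the commitment and pairing steps of KZG are left out entirely.

```python
P = 101  # toy prime field (an assumption for illustration)


def evaluate(poly, x):
    """Evaluate a polynomial (coefficients low-to-high) at x."""
    acc = 0
    for coeff in reversed(poly):
        acc = (acc * x + coeff) % P
    return acc


def opening_quotient(poly, z):
    """Return (y, q) with y = poly(z) and q(X) = (poly(X) - y) / (X - z)."""
    q = [0] * (len(poly) - 1)
    carry = 0
    # Synthetic division by (X - z), working from the leading coefficient down.
    for i in range(len(poly) - 1, 0, -1):
        carry = (poly[i] + carry * z) % P
        q[i - 1] = carry
    # The final remainder of the division is exactly poly(z).
    y = (poly[0] + carry * z) % P
    return y, q


# f(X) = X^3 + 2X^2 + 5, opened at z = 7.
f = [5, 0, 2, 1]
z = 7
y, q = opening_quotient(f, z)
```

For this example `y` is 42 and `q` is `[63, 9, 1]`, and the identity $f(x) - y = q(x)(x - z)$ holds at every point of the field. In the real protocol the proof is a commitment to `q`, not `q` itself.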

Note also that there are many evaluation proofs that are aggregated together using a random linear combination. This is a common technique to aggregate multiple KZG evaluation proofs (and the verifier will have to compute the same random linear combination on the other side to verify the aggregation). In order to be more efficient (at the cost of a tiny amount of security loss) we use 6 powers of $v$ instead of using 6 random values.
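Here is a minimal sketch of that aggregation, restricted to the polynomial arithmetic. Several claims $f_i(z) = y_i$ are folded into one polynomial $\sum_i v^i (f_i(X) - y_i)$, which has a root at $z$ when every claim is correct. The field, the three toy polynomials, and the values of `z` and `v` are assumptions for illustration; in the protocol `v` would come from Fiat-Shamir.

```python
P = 101  # toy prime field (an assumption for illustration)


def evaluate(poly, x):
    """Evaluate a polynomial (coefficients low-to-high) at x."""
    acc = 0
    for coeff in reversed(poly):
        acc = (acc * x + coeff) % P
    return acc


def batch(polys, claims, v):
    """Return sum_i v^i * (f_i(X) - y_i) as one coefficient list."""
    out = [0] * max(len(p) for p in polys)
    power = 1  # v^0, then v^1, v^2, ...
    for poly, y in zip(polys, claims):
        # Subtract the claimed evaluation from the constant term.
        shifted = [(poly[0] - y) % P] + list(poly[1:])
        for k, coeff in enumerate(shifted):
            out[k] = (out[k] + power * coeff) % P
        power = power * v % P
    return out


polys = [[1, 2], [3, 0, 1], [4, 5, 6]]
z, v = 7, 10
claims = [evaluate(p, z) for p in polys]

honest = batch(polys, claims, v)
# Tamper with the first claimed evaluation to see the check fail.
cheating = batch(polys, [(claims[0] + 1) % P] + claims[1:], v)
```

`honest` evaluates to zero at `z`, so it is divisible by $(X - z)$ and a single quotient covers all three openings. `cheating` does not evaluate to zero at `z`. Using powers of one value `v` instead of independent random values is the efficiency tradeoff the paragraph above mentions.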

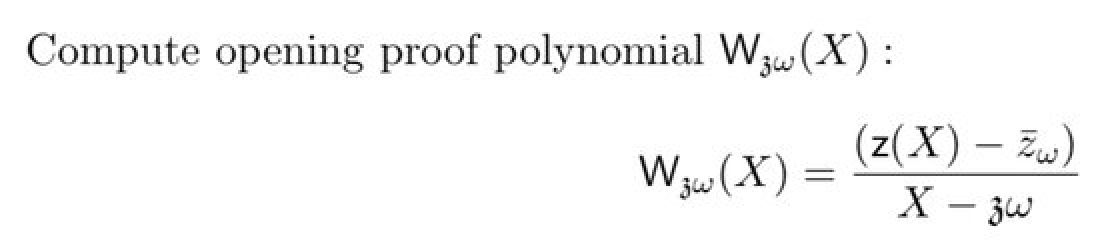

In the polynomial above, within the composition polynomial, you might have noticed the value $\bar{z}_{\omega}$. It is the permutation polynomial $Z$ evaluated at $z \omega$. The only explanation I have of why you need that is in my video on the permutation of PlonK. Since the evaluation point ($z \omega$) is different from the one in the first evaluation proof, we need a separate evaluation proof for that one (unfortunately).
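In the same quotient form as the first proof, the second opening can be sketched as follows (the name $W_{z\omega}$ follows the PlonK paper and is an assumption as far as this post goes):

$$W_{z\omega}(X) = \frac{Z(X) - \bar{z}_{\omega}}{X - z\omega}$$

The denominator is $X - z\omega$ rather than $X - z$, so this quotient cannot be folded into the random linear combination of openings at $z$. Roughly speaking, the shifted point shows up because the permutation check relates the value of $Z$ at one element of the domain to its value at the next one.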

The output is the pair of evaluation proofs. That's it!

Comments

rod9

I'm a little skeptical about PlonK. It seems like a very complex technology, and I'm not sure if it's really necessary. I think we should focus on improving the existing technologies, like STARKs, before we move on to something new.

betranW

I'm sick of hearing about PlonK and STARKs. They're both just buzzwords to me. I want to see real products and real applications that are built on top of these technologies. Until then, I'm not going to get excited about them.

Mainxyz

PlonK is the future! It's more efficient and scalable than STARKs, and it's going to revolutionize the way we use blockchains. I'm so excited to see what developers can build with this technology.

Rishabh Gupta

While revising the blog, I was not clear on this line: "Note that the prover could evaluate everything if they wanted to, which would let the verifier compute the entire check "in the clear". But doing that means that the proof is larger, and there are more evaluation proofs to aggregate. It's a tradeoff, that might pay off if you also want to implement recursive zero-knowledge proofs (which require a verifier implemented in a circuit)."

So if the prover evaluates everything then why is the proof large? Also, what are evaluation proofs and where are they aggregated?

Rishabh Gupta

Got a definition of evaluation proof, they are just proofs of opening.

Soumya

In the first opening proof, we have $(r(X) + v(a(X) - \bar{a}) + \dots)/(X - z)$

But the opening proofs are of type $(f(X) - f(z))/(X - z)$, so how does this compare over here?
