How do people find bugs? posted November 2020

You might wonder how people find bugs. Low-hanging fruit bugs can be found via code review, static analysis, dynamic analysis (like fuzzing), and other techniques. But what about deep logic bugs. Those you can’t find easily. Perhaps the protocol implemented is quite complicated, or correctness is hard to define, and edge-cases hard to detect. One thing I’ve noticed is that re-visiting protocols are an excellent way to find logic bugs.

Ian Miers once said something like that: "you need time, expertise, and meaningful engagement”. I like that sentence, although one can point out that these traits are closely linked--you can’t have meaningful engagement without time and expertise--it does show that finding bugs take "effort".

OK. Meaningful engagement can lead to meaningful bugs, and meaningful bugs can be found at different levels. So you're here, seating in your undies in the dark, with a beer on your side and some uber eats lying on the floor. Your computer is staring back at you, blinking at a frequency you can't notice, and waiting for you to find a bug in this protocol. What do you do? Perhaps the protocol doesn't have a proof, and this leads you to wonder if you can write one for it...

It worked for Ariel Gabizon, who in 2018 found a subtle error in a 2013 zk-SNARK paper used by the Zcash cryptocurrency he was working on. He found it by trying to write a proof for the paper he was reading, realizing that the authors had winged it. While protocols back in the days could afford to wing it, these days people are more difficult--they demand proofs. The bug Ariel found could have allowed anyone to forge an unlimited amount of money undetected. It was silently fixed months later in an upgrade to the network.

Ariel Gabizon, a cryptographer employed by the Zcash Company at the time of discovery, uncovered a soundness vulnerability. The key generation procedure of [BCTV14], in step 3, produces various elements that are the result of evaluating polynomials related to the statement being proven. Some of these elements are unused by the prover and were included by mistake; but their presence allows a cheating prover to circumvent a consistency check, and thereby transform the proof of one statement into a valid-looking proof of a different statement. This breaks the soundness of the proving system.

What if the protocol already had a proof though? Well that doesn't mean much, people enjoy writing unintelligible proofs, and people make errors in proofs all the time. So the second idea is that reading and trying to understand a proof might lead to a bug in the proof. Here's some meaningful engagement for you.

In 2001, Shoup revisited some proofs and found some darning gaps in the proofs for RSA-OAEP, leading to a newer scheme OAEP+ which was never adopted in practice. Because back then, as I said, we really didn't care about proofs.

[BR94] contains a valid proof that OAEP satisfies a certain technical property which they call “plaintext awareness.” Let us call this property PA1. However, it is claimed without proof that PA1 implies security against chosen ciphertext attack and non-malleability. Moreover, it is not even clear if the authors mean adaptive chosen ciphertext attack (as in [RS91]) or indifferent (a.k.a. lunchtime) chosen ciphertext attack (as in [NY90]).

Later in 2018, a series of discoveries on the proofs for the OCB2 block cipher quickly led to practical attacks breaking the cipher.

We have presented practical forgery and decryption attacks against OCB2, a high-profile ISO-standard authenticated encryption scheme. This was possible due to the discrepancy between the proof of OCB2 and the actual construction, in particular the interpretation of OCB2 as a mode of a TBC which combines XEX and XE.

We comment that, due to errors in proofs, ‘provably-secure schemes’ sometimes still can be broken, or schemes remain secure but nevertheless the proofs need to be fixed. Even if we limit our focus to AE, we have many examples for this, such as NSA’s Dual CTR [37,11], EAX-prime [28], GCM [22], and some of the CAESAR submissions [30,10,40]. We believe our work emphasizes the need for quality of security proofs, and their active verification.

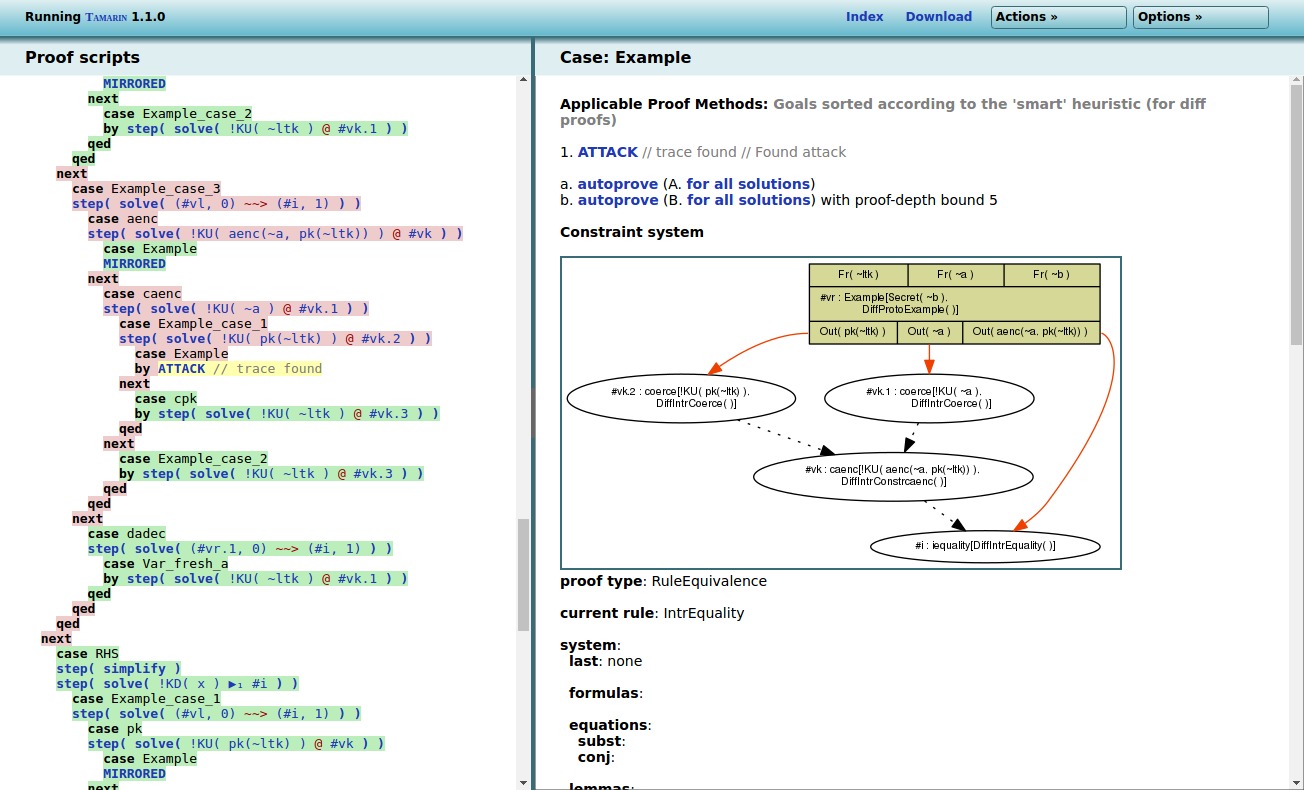

Now, reading and verifying a proof is always a good idea, but it’s slow, it’s not flexible (if you change the protocol, good job changing the proof), and it’s limited (you might want to prove different things re-using parts of the proofs, which is not straight forward). Today, we are starting to bridge the gap between pen and paper proofs and computer science: it is called formal verification. And indeed, formal verification is booming, with a number of papers in the recent years finding issues here and there just by describing protocols in a formal language and verifying that they withstand different types of attacks.

We implement our improved models in the Tamarin prover. We find a new attack on the Secure Scuttlebutt Gossip protocol, independently discover a recent attack on Tendermint’s secure handshake, and evaluate the effectiveness of the proposed mitigations for recent Bluetooth attacks.

Seems Legit: Automated Analysis of Subtle Attacks on Protocols that Use Signatures:

We implement our models in the Tamarin Prover, yielding the first way to perform these analyses automatically, and validate them on several case studies. In the process, we find new attacks on DRKey and SOAP’s WS-Security, both protocols which were previously proven secure in traditional symbolic models.

But even this kind of techniques has limitation! (OMG David when will you stop?)

In 2017 Matthew Green wrote:

I don’t want to spend much time talking about KRACK itself, because the vulnerability is pretty straightforward. Instead, I want to talk about why this vulnerability continues to exist so many years after WPA was standardized. And separately, to answer a question: how did this attack slip through, despite the fact that the 802.11i handshake was formally proven secure?

He later writes:

The critical problem is that while people looked closely at the two components — handshake and encryption protocol — in isolation, apparently nobody looked closely at the two components as they were connected together. I’m pretty sure there’s an entire geek meme about this.

pointing to the "2 unit tests. 0 integration tests." joke.

He then recognizes that it’s a hard problem:

Of course, the reason nobody looked closely at this stuff is that doing so is just plain hard. Protocols have an exponential number of possible cases to analyze, and we’re just about at the limit of the complexity of protocols that human beings can truly reason about, or that peer-reviewers can verify. The more pieces you add to the mix, the worse this problem gets. In the end we all know that the answer is for humans to stop doing this work. We need machine-assisted verification of protocols, preferably tied to the actual source code that implements them. This would ensure that the protocol actually does what it says, and that implementers don’t further screw it up, thus invalidating the security proof.

Well, Matthew, we do have formally generated code! HACL* and fiat-crypto are two examples. Anybody has heard of that failing? I’d be interested…

In any case, what’s left for us? A lot! Formally generated code is hard, and generally covers small parts of your protocol (e.g. field arithmetic for elliptic curves). So what else can we do? Implementing the protocol, if it hasn’t been implemented before, is a no-brainer. In 2016, Taylor Hornby an engineer at Zcash wrote about a bug he found while implementing the zerocash paper into the Zcash cryptocurrency:

In this blog post, we report on the security issues we’ve found in the Zcash protocol while preparing to deploy it as an open, permissionless financial system. Had we launched Zcash without finding and fixing the InternalH Collision vulnerability, it could have been exploited to counterfeit currency. Someone with enough computing power to find 128-bit hash collisions would have been able to double-spend money to themselves, creating Zcash out of thin air.

Perhaps re-implementing the protocol in a different language might work as well?

One last thing, most of the code out there is not formally verified. So of course, reviewing code works, but you need time, expertise, money, etc. So instead, what about testing? This is what Wycheproof does by implementing a number of test vectors that are known to cause issues:

These observations have prompted us to develop Project Wycheproof, a collection of unit tests that detect known weaknesses or check for expected behaviors of some cryptographic algorithm. Project Wycheproof provides tests for most cryptographic algorithms, including RSA, elliptic curve crypto and authenticated encryption. Our cryptographers have systematically surveyed the literature and implemented most known attacks. We have over 80 test cases which have uncovered more than 40 bugs. For example, we found that we could recover the private key of widely-used DSA and ECDHC implementations.

In all of that, I didn't even talk about the benefits of writing a specification... that's for another day.

Comments

bertrand

That is brilliant! I did not know of all these attacks. Can one say that zcash shouldn't be used then? Or is it more secure due to these findings?

ange

writing another implementation is definitely something that helps... as opposed to what Daira says.

Mingde

Great article! It makes me wonder whether it is possible or not to automatically detect potential side-channel attacks in these protocols via methods like formal verification, model checking and things like that.

Then I found this one related: https://dl.acm.org/doi/10.1145/3319535.3354250

But maybe there are more...

leave a comment...